Markov Chain Monte Carlo II

PSYC 573

University of Southern California

February 17, 2022

What has been useful?

- R commands, exercises

Struggles/Suggestions?

- Download Rmd from website

- Math concepts

- More R code (especially related to the homework)

Changes

- Speed up a bit

- Zoom

- More time in R

The original Metropolis (random walk) algorithm allows posterior sampling without the need to solve the integral (i.e., the normalizing constant of the posterior)

However, it is inefficient, especially in high-dimensional problems (i.e., many parameters): many proposals are rejected, and consecutive draws are highly autocorrelated
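A minimal random-walk Metropolis sketch makes both sources of inefficiency visible: some proposals are rejected (the chain repeats its value) and the kept draws are autocorrelated. The simulated data, the known σ = 1.2, the N(0, 10²) prior, the step size 0.5, and the name `log_post` are all illustrative assumptions, not part of the study.

```r
set.seed(573)
y <- rnorm(30, mean = 3, sd = 1.2)  # hypothetical data, not the study data

# Log posterior of mu (up to a constant), treating sigma = 1.2 as known
# and using a N(0, 10^2) prior on mu; both are illustrative assumptions
log_post <- function(mu) {
  sum(dnorm(y, mean = mu, sd = 1.2, log = TRUE)) +
    dnorm(mu, mean = 0, sd = 10, log = TRUE)
}

n_iter <- 5000
draws <- numeric(n_iter)
current <- 0      # arbitrary starting value
accepted <- 0
for (s in seq_len(n_iter)) {
  proposal <- current + rnorm(1, mean = 0, sd = 0.5)  # symmetric random-walk step
  # Accept with probability min(1, posterior ratio), computed on the log scale
  if (log(runif(1)) < log_post(proposal) - log_post(current)) {
    current <- proposal
    accepted <- accepted + 1
  }
  draws[s] <- current  # a rejected proposal repeats the previous value
}
accept_rate <- accepted / n_iter
lag1_autocor <- acf(draws, lag.max = 1, plot = FALSE)$acf[2]
c(acceptance = accept_rate, lag1 = lag1_autocor)
```

With one parameter the cost is modest; with many parameters a single random-walk step rarely lands in a high-posterior region, so rejections and autocorrelation grow.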

Data Example

Taking notes with a pen or a keyboard?

Mueller & Oppenheimer (2014, Psych Science)

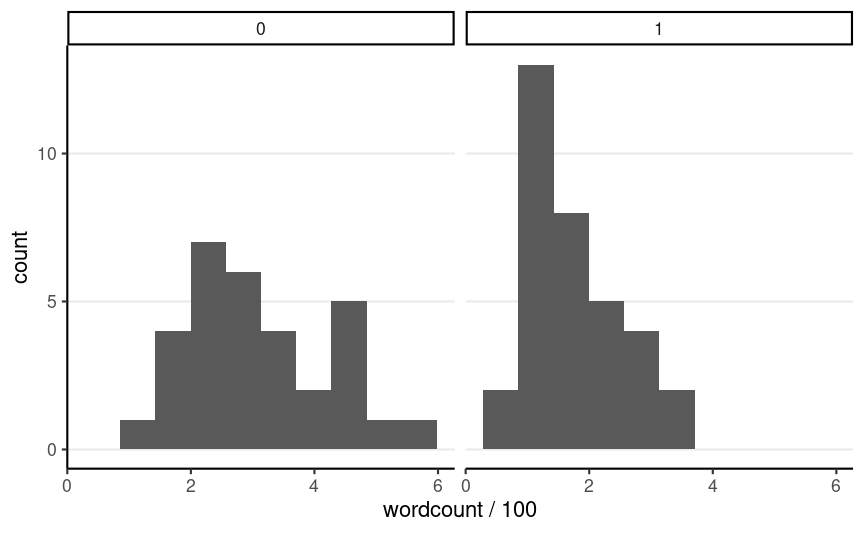

```r
# Use haven::read_sav() to import SPSS data
nt_dat <- haven::read_sav("https://osf.io/qrs5y/download")
```

condition: 0 = laptop, 1 = longhand

| condition | wordcount | objectiveZ | openZ |
|---|---|---|---|
| 0 | 572 | -1.175 | 0.111 |
| 0 | 226 | 0.317 | -0.864 |
| 0 | 255 | -1.175 | -0.376 |
| 0 | 298 | 1.063 | 1.086 |
| 1 | 203 | -1.921 | -0.376 |
| 1 | 127 | -0.056 | 0.111 |
| 1 | 258 | 0.690 | 0.111 |
| 1 | 152 | -0.429 | 0.599 |

Do people write more or fewer words when asked to use longhand?

Normal model

Consider only the laptop group first

$\text{wc\_laptop}_i \sim N(\mu, \sigma^2)$

Two parameters: $\mu$ (mean) and $\sigma^2$ (variance)
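As a sketch of what this model says about the data, the log-likelihood is the sum of normal log densities over the laptop-group word counts. Only the four laptop rows displayed in the table are used here (the full data are in `nt_dat`); `loglik_normal` is a hypothetical helper name. Note that `dnorm()` takes the standard deviation, so the variance must be square-rooted.

```r
# Log-likelihood of the normal model: sum over i of log N(wc_i | mu, sigma2)
loglik_normal <- function(mu, sigma2, y) {
  sum(dnorm(y, mean = mu, sd = sqrt(sigma2), log = TRUE))  # dnorm() expects an SD
}

# Only the four laptop-group rows displayed in the table (not the full data set)
wc_laptop <- c(572, 226, 255, 298)
mu_hat <- mean(wc_laptop)
sigma2_hat <- mean((wc_laptop - mu_hat)^2)  # maximum likelihood estimate (divides by n)
loglik_normal(mu_hat, sigma2_hat, wc_laptop)
```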

Gibbs Sampling

Gibbs sampling is efficient because it generates smart proposed values: with conjugate or semiconjugate priors, each parameter is drawn directly from its conditional posterior

Implemented in software like BUGS and JAGS

Useful when:

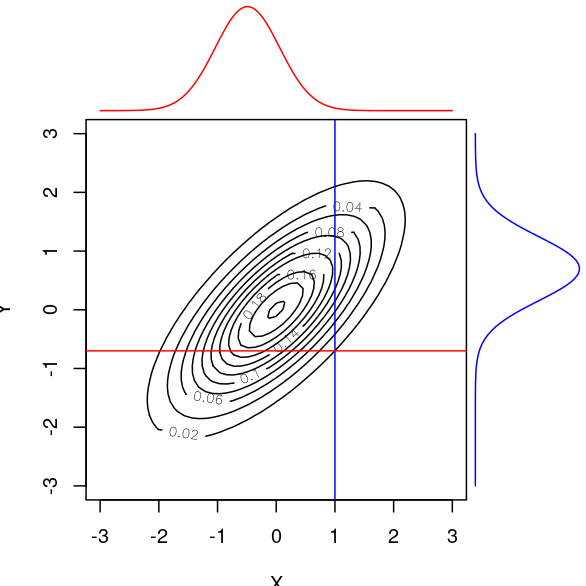

- Joint posterior is intractable, but the conditional distributions are known
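A common toy target for seeing the mechanics is a standard bivariate normal with correlation ρ, whose full conditionals are $x_1 \mid x_2 \sim N(\rho x_2, 1 - \rho^2)$ and symmetrically for $x_2$. Its joint is actually tractable, so this is only an illustration of cycling through known conditionals; `gibbs_bvn` and ρ = 0.6 are assumptions for the sketch.

```r
# Gibbs sampling sketch: standard bivariate normal with correlation rho
gibbs_bvn <- function(n_iter, rho, start = c(0, 0)) {
  draws <- matrix(NA_real_, nrow = n_iter, ncol = 2,
                  dimnames = list(NULL, c("x1", "x2")))
  x <- start
  cond_sd <- sqrt(1 - rho^2)  # SD of each full conditional
  for (s in seq_len(n_iter)) {
    x[1] <- rnorm(1, mean = rho * x[2], sd = cond_sd)  # draw x1 | x2
    x[2] <- rnorm(1, mean = rho * x[1], sd = cond_sd)  # draw x2 | x1, using the new x1
    draws[s, ] <- x
  }
  draws
}

set.seed(1)
out <- gibbs_bvn(20000, rho = 0.6)
cor(out[, "x1"], out[, "x2"])  # should be close to 0.6
```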

Another example

Conjugate priors for conditional distributions

$$\mu \sim N(\mu_0, \tau_0^2), \qquad \sigma^2 \sim \text{Inv-Gamma}\left(\frac{\nu_0}{2}, \frac{\nu_0 \sigma_0^2}{2}\right)$$

- $\mu_0$: prior mean; $\tau_0^2$: prior variance (i.e., uncertainty) of the mean

- $\nu_0$: prior sample size for the variance; $\sigma_0^2$: prior guess of the variance
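A quick way to see what these priors imply is to simulate from them. Base R has no inverse-gamma sampler, so this sketch uses the fact that if $X \sim \text{Gamma}(a, \text{rate} = b)$ then $1/X \sim \text{Inv-Gamma}(a, b)$. The hyperparameters are the values listed under Posterior Summary. With $\nu_0 = 1$ the prior mean of $\sigma^2$ does not exist, but $E[1/\sigma^2] = 1/\sigma_0^2$, which is one way to read $\sigma_0^2$ as a "prior guess".

```r
mu0 <- 5; tau0_sq <- 100   # prior mean and prior variance of mu
nu0 <- 1; sigma0_sq <- 1   # prior sample size and prior guess of the variance

set.seed(2)
n_draws <- 1e5
mu_prior <- rnorm(n_draws, mean = mu0, sd = sqrt(tau0_sq))  # rnorm() takes an SD
# If X ~ Gamma(shape = a, rate = b), then 1 / X ~ Inv-Gamma(a, b)
sigma2_prior <- 1 / rgamma(n_draws, shape = nu0 / 2, rate = nu0 * sigma0_sq / 2)

quantile(sigma2_prior, c(0.05, 0.5, 0.95))  # heavy right tail when nu0 is small
```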

Posterior

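$$\mu \mid \sigma^2, y \sim N(\mu_n, \tau_n^2), \qquad \sigma^2 \mid \mu, y \sim \text{Inv-Gamma}\left(\frac{\nu_n}{2}, \frac{\nu_n \sigma_n^2(\mu)}{2}\right)$$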

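- $\tau_n^2 = \left(\frac{1}{\tau_0^2} + \frac{n}{\sigma^2}\right)^{-1}$; $\mu_n = \tau_n^2 \left(\frac{\mu_0}{\tau_0^2} + \frac{n \bar y}{\sigma^2}\right)$

- $\nu_n = \nu_0 + n$; $\sigma_n^2(\mu) = \frac{1}{\nu_n}\left[\nu_0 \sigma_0^2 + (n - 1) s_y^2 + n (\bar y - \mu)^2\right]$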
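A direct transcription of these conditional-posterior formulas into two small helper functions (the names `mu_cond_params` and `sigma2_cond_params` are hypothetical). The term $(n - 1) s_y^2 + n(\bar y - \mu)^2$ equals $\sum_i (y_i - \mu)^2$, which gives a convenient check.

```r
# Parameters of mu | sigma2, y ~ N(mu_n, tau_n^2)
mu_cond_params <- function(sigma2, y, mu0, tau0_sq) {
  n <- length(y)
  tau_n_sq <- 1 / (1 / tau0_sq + n / sigma2)                # posterior variance
  mu_n <- tau_n_sq * (mu0 / tau0_sq + n * mean(y) / sigma2)  # precision-weighted mean
  c(mean = mu_n, var = tau_n_sq)
}

# Parameters of sigma2 | mu, y ~ Inv-Gamma(nu_n / 2, nu_n * sigma_n^2(mu) / 2)
sigma2_cond_params <- function(mu, y, nu0, sigma0_sq) {
  n <- length(y)
  nu_n <- nu0 + n
  sigma_n_sq <- (nu0 * sigma0_sq + (n - 1) * var(y) + n * (mean(y) - mu)^2) / nu_n
  c(shape = nu_n / 2, rate = nu_n * sigma_n_sq / 2)
}

y_demo <- c(2.1, 3.4, 2.8, 4.0, 3.3)  # hypothetical data for illustration
mu_cond_params(sigma2 = 1, y = y_demo, mu0 = 5, tau0_sq = 100)
sigma2_cond_params(mu = 3, y = y_demo, nu0 = 1, sigma0_sq = 1)
```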

No need for a separate proposal distribution; directly sample the conditional posterior

- Thus, all draws are accepted
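Putting the two conditionals together gives a complete Gibbs sampler: alternate between drawing $\mu$ given the current $\sigma^2$ and $\sigma^2$ given the new $\mu$. This sketch runs one chain on simulated data; the data, the starting value `var(y)`, and the name `gibbs_normal` are assumptions, while the hyperparameters match the Posterior Summary slide.

```r
gibbs_normal <- function(y, n_iter, mu0, tau0_sq, nu0, sigma0_sq, sigma2_start = var(y)) {
  n <- length(y); ybar <- mean(y); s2 <- var(y)
  nu_n <- nu0 + n
  draws <- matrix(NA_real_, nrow = n_iter, ncol = 2,
                  dimnames = list(NULL, c("mu", "sigma2")))
  sigma2 <- sigma2_start
  for (s in seq_len(n_iter)) {
    # Draw mu | sigma2, y from N(mu_n, tau_n^2)
    tau_n_sq <- 1 / (1 / tau0_sq + n / sigma2)
    mu_n <- tau_n_sq * (mu0 / tau0_sq + n * ybar / sigma2)
    mu <- rnorm(1, mean = mu_n, sd = sqrt(tau_n_sq))
    # Draw sigma2 | mu, y from Inv-Gamma(nu_n / 2, nu_n * sigma_n^2(mu) / 2)
    sigma_n_sq <- (nu0 * sigma0_sq + (n - 1) * s2 + n * (ybar - mu)^2) / nu_n
    sigma2 <- 1 / rgamma(1, shape = nu_n / 2, rate = nu_n * sigma_n_sq / 2)
    draws[s, ] <- c(mu, sigma2)  # every draw is kept: there is no accept/reject step
  }
  draws
}

set.seed(3)
y <- rnorm(40, mean = 3, sd = 1.2)  # hypothetical data, not the study data
post <- gibbs_normal(y, n_iter = 10000, mu0 = 5, tau0_sq = 100, nu0 = 1, sigma0_sq = 1)
colMeans(post)
```

Unlike the random-walk chain, every stored value is new, so there is no acceptance rate to tune.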

Posterior Summary

2 chains, 10,000 draws each (first half of each chain discarded as warm-up)

$\mu_0 = 5$, $\sigma_0^2 = 1$, $\tau_0^2 = 100$, $\nu_0 = 1$

| variable | mean | median | sd | mad | q5 | q95 | rhat | ess_bulk | ess_tail |
|---|---|---|---|---|---|---|---|---|---|
| mu | 3.1 | 3.10 | 0.213 | 0.211 | 2.753 | 3.45 | 1 | 9928 | 9936 |
| sigma2 | 1.4 | 1.33 | 0.378 | 0.337 | 0.904 | 2.11 | 1 | 10189 | 10136 |

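The effective sample size (ESS) is almost as large as the number of post-warm-up draws (2 × 5,000 = 10,000), meaning consecutive draws are nearly independent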
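The column names in the table appear to match the default output of `posterior::summarise_draws()`, whose `ess_bulk` uses rank normalization across chains. As a rough single-chain analogue only (not that estimator), ESS can be approximated as $n / (1 + 2\sum_k \rho_k)$, summing autocorrelations until the first non-positive one. `ess_simple` and the simulated chains below are illustrative assumptions: independent draws stand in for a well-mixing Gibbs chain, and an AR(1) series with coefficient 0.9 stands in for a sticky chain.

```r
# Simplified single-chain ESS: n / (1 + 2 * sum of autocorrelations),
# truncating the sum at the first non-positive autocorrelation
ess_simple <- function(x, max_lag = 200) {
  n <- length(x)
  rho <- acf(x, lag.max = max_lag, plot = FALSE)$acf[-1]  # drop lag 0
  first_nonpos <- which(rho <= 0)[1]
  if (!is.na(first_nonpos)) rho <- rho[seq_len(first_nonpos - 1)]
  n / (1 + 2 * sum(rho))
}

set.seed(4)
indep <- rnorm(10000)                                     # nearly independent draws
ar1 <- as.numeric(arima.sim(list(ar = 0.9), n = 10000))   # strongly autocorrelated chain
c(independent = ess_simple(indep), autocorrelated = ess_simple(ar1))
```

For the AR(1) chain the theoretical value is about $10000 \times 0.1 / 1.9 \approx 526$, far below the 10,000 draws, whereas the independent draws keep an ESS close to 10,000, as in the table.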